Microsoft announced Copilot for Windows today. Here’s the 60-second snippet to catch you up:

I’ve had a few drafts around this topic for a while now. Today’s Copilot launch feels like the right time to get something—anything—out for the sake of moving on.

So here’s my current thinking on artificial intelligence as it affects the design industry.

On Windows Copilot

‘Copilot’ feels like the right way to describe our relationship to AI as makers. AI tools are the there-if-you-need-them navigator (and soon to be better driver[1]) sitting in the passenger seat of your car.

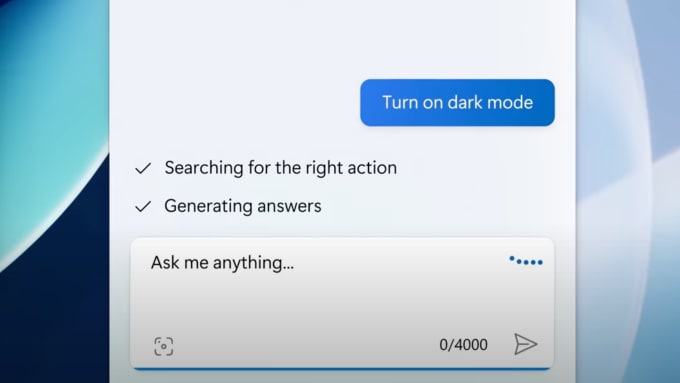

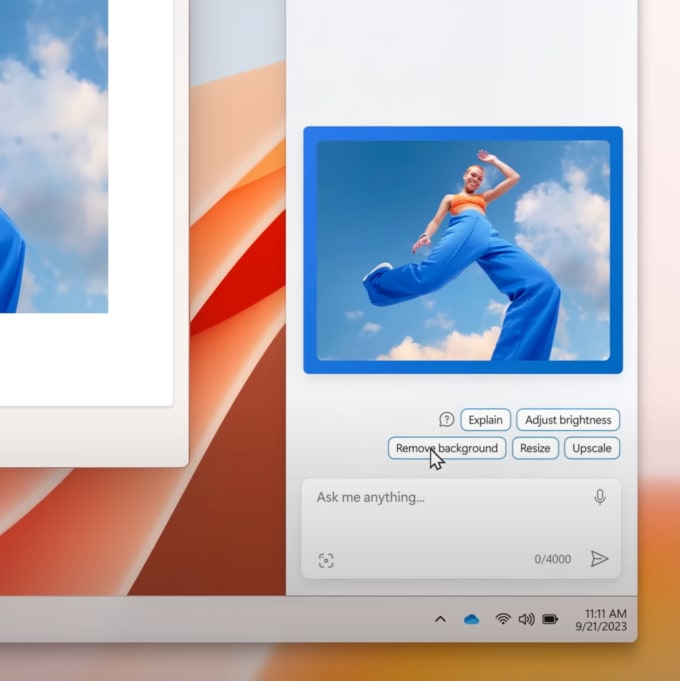

The natural language interactions (dictating and typing out commands), however, look cumbersome for half of the things demoed in the above video: changing colour schemes, window management, and music selection. To me it’s akin to using the terminal to get things done before the invention of the GUI.

Using natural language for higher-level interactions feels right: back-and-forth art direction, conversation, and research. I’d expect this to stick around beyond the invention of the GUI.

More broadly

The invention, maturation, and manipulation of this GUI will form the bulk of design work going forward. Work designing bespoke interfaces directly for consumer products will decrease. Work designing or manipulating interfaces for AI to design those interfaces will increase (for a select few).

In other words: designers will focus either on designing these tools (technical product design) or on ‘prompt engineering’ to turn problems or opportunities into the right inputs (service design). The broader team[2] will absorb the role that designers like myself currently take: composing and iterating on interfaces.

I see this shared role playing out in interactions similar to what Noah Levin shared at this year’s Figma Config conference, just more aggressively (and democratically) than what was demoed onstage:

I’m probably wrong

Most designers or technologists I’ve tested this on disagree with me. The general response is something like “our specific role is too nebulous and difficult to be automated any time soon”. I think that’s a blind spot created by a fear of redundancy[3].

Brad Frost writes that many predictions on this subject (mine included, I assume) are “reactive, myopic, and short-sighted”. He also writes the following in response to a bit about AI taking over rote design work:

“But it still requires human discretion and decision-making (and don’t forget that important moral compass!) in order to separate the wheat from the chaff.”

I’m not sure about that. Look at the case of AlphaZero’s triumph over AlphaGo[4]: it was precisely the lack of human discretion and decision-making that let Zero become creative enough to win a notoriously complex game against its well-trained opponent.

We’ll see how this shakes out. And probably pretty soon.

[1] A better driver isn’t necessarily a better future.

[2] I suppose this could be any team member with design sensibilities. If a product manager has design sensibilities (as a lot of them do), a designer might come in to tie up loose ends.

[3] This seems to be a common response from people in white-collar jobs. I think all these jobs have unhappy endings, but that’s a musing for another time.

[4] The Demis Hassabis (of DeepMind) episode of The Ezra Klein Show explains the AlphaGo and AlphaZero story well. Skip to about 30–40 minutes in.